In fiction, we tend to rank intelligence linearly. Someone is smarter than someone else, and that lets them do more smart-person things. Smart-person things generally look like competent-person things, except they use words that the scriptwriters think sound impressive. Oh, and success. Being smart means being successful. All the time. First try.

In my experience, intelligence isn't just non-linear; it's downright non-transitive. You can't rank people in a single order, and you can't predict when someone will be competent based on their intelligence unless you've seen them do exactly the same thing before.

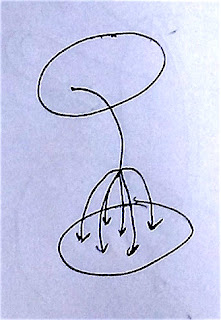

I'm going to explore what I think is going on.

How do we know

How do we know things? Like, if you see the number '4' written on a wall, then how do you know what it is and how do you know what it's good for?

- Peer Pressure

- Gut feeling / experience

- Structural analysis

Peer pressure is relying on groups of people and society's expectations to decide what to do. While we tell children that they shouldn't listen to peer pressure, what we actually teach them is that they should totally listen to peer pressure (just try not to listen to stupid peer pressure ... unless it's coming from an arbitrary authority figure). This doesn't have to be a bad thing. For example, learning to talk comes from peer pressure.

Gut feelings and/or experience. When talking about getting out of dangerous situations, people will often talk about listening to their gut. They can't put their finger on exactly what was wrong, but they say that their gut told them something bad was going to happen. Similarly, we talk about having sufficient experience to do a specific job. If it were just about memorizing rules, then anyone with a sufficiently large database on a portable device could do any job (open-heart surgeon, world-class composer, commander of an army). We don't do things like that for a reason. We want people to have this vaguely defined 'experience'. We know it when we see it, and we can't describe exactly how it works. (Although we are good at telling stories about it.)

Structural analysis more or less reduces to mathematics. However, it can also be algorithms: really anything that we trust to work itself out mechanically. Take adding very large numbers together. We might be able to use our gut reactions to tell if the addition went wrong in any dramatic way, but for the most part we're just trusting the elementary school techniques we learned to work. It's not all mindless, though. For example, if you're adding 398 to 455, then you can structurally check the answer by saying something like, "Both numbers are lower than 500, and 500 + 500 is 1000. Additionally, both numbers are larger than 300, and 300 + 300 is 600. Therefore, whatever answer we get had better be between 600 and 1000." It's a creative application of logic, but the end result can function in a mindless fashion if it has to.
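The bracket check above is mechanical enough to write down. Here is a minimal Python sketch, assuming non-negative integers: `column_add` follows the carry-the-one method from school, and `within_bracket` applies the reasoning from the quote (if both operands lie between `low` and `high`, the sum has to lie between `2*low` and `2*high`). Both function names are made up for illustration.

```python
def column_add(a: int, b: int) -> int:
    """Add two non-negative integers digit by digit, carrying like the school method."""
    xs = [int(d) for d in reversed(str(a))]  # ones digit first
    ys = [int(d) for d in reversed(str(b))]
    digits, carry = [], 0
    for i in range(max(len(xs), len(ys))):
        x = xs[i] if i < len(xs) else 0
        y = ys[i] if i < len(ys) else 0
        carry, digit = divmod(x + y + carry, 10)  # write the digit, carry the rest
        digits.append(digit)
    if carry:
        digits.append(carry)
    return int("".join(str(d) for d in reversed(digits)))


def within_bracket(a: int, b: int, answer: int, low: int, high: int) -> bool:
    """Structural sanity check: if low <= a, b <= high, then 2*low <= a + b <= 2*high."""
    if not (low <= a <= high and low <= b <= high):
        raise ValueError("operands must lie inside [low, high]")
    return 2 * low <= answer <= 2 * high


total = column_add(398, 455)
print(total)                                      # 853
print(within_bracket(398, 455, total, 300, 500))  # True: 853 is between 600 and 1000
print(within_bracket(398, 455, 1853, 300, 500))   # False: a dramatic slip is caught
```

The check only catches dramatic slips: a wrong answer like 843 would still land between 600 and 1000. That matches the role described above, where the structure acts as a sanity check on the mechanical procedure rather than replacing it.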

I missed one way to know things. We're more or less going to skip it, but we can talk about it first to see why.

Knowing things supernaturally

My list above has a zeroth entry: supernatural knowledge. We're going to leave this one alone, though, because things get hopelessly unknowable pretty quickly.

God

First of all, maybe God is giving you knowledge. Some people like to theorize that maybe God is evil. But this is pretty crazy and fatalistic thinking. Like, you can't even kill yourself in the reality where God is evil. God can just bring you back. Heaven can switch to Hell at a moment's notice, and your entire life experience might just be an illusion from God. People who see evil, suffering, and injustice in the world and conclude that God is evil are either suffering from a woefully inadequate imagination or just trying to spite God. The evil-God scenario is so much worse than the evil we see in the world today. So we're going to say that God is good, if only because if God is evil, then nothing else in this essay matters. The only thing that would matter is the eternal suffering that we're all definitely going to go through.

So if God is good, then the knowledge you get from God is going to be good. Unfortunately, this does not mean that you can trust people who say that they're getting knowledge from God. For starters, suppose someone gets knowledge from God and shares it with others. This person is going to be treated favorably by their peers. After all, they're giving everyone good knowledge from God. Other people will see this and, wanting both the preferential treatment and to have their own ideas implemented by others, will lie and claim that their personal ideas are actually God's knowledge. Just because you see someone saying that they are getting knowledge from God doesn't mean that you can trust them. Of course, there are other considerations.

Not God

Supernatural knowledge can come from God. But it can also come from supernatural sources that are not God. While we pretty much have to assume God is good if we want anything else to even matter, we don't have to assume that those other sources are good.

Like before we have to be concerned that a supernatural knowledge source might not actually be supernatural, but instead someone only pretending to have supernatural knowledge. But we also have to worry that someone does have supernatural knowledge which is coming from a malicious source. And then things get complicated.

What happens if a supernatural source can track where the knowledge it gives will end up before it gives it? In much the same way that we're able to statically track data using sophisticated type systems, the supernatural source can ensure that it only gives out good knowledge when that knowledge will only reach the "true believers" of the supernatural source. Feel free to replace "true believers" with any property, including ones that are not computable or even downright nonsense (the supernatural source may be able to handle it using techniques not available to us in the natural world). If this is going on, then we really have no way to evaluate knowledge from a supernatural source. There's no way for us to make sure that only "true believers" get the knowledge (and who knows what kind of criteria a supernatural source is even looking for). And besides, if we have to believe in it to get it, then we can't skeptically analyze it.

But if we think about it, things can get even worse.

The ghosts are messing with us

All the interviews with psychics that I have seen featured a psychic who was pretty clearly using a cold reading technique. Cold reading is a way of fooling people into thinking that you have special powers by saying things that are statistically likely to be true until you land on something that actually is true. Or even just true enough. People tend to go along with the group because peer pressure is a legitimate knowledge method. Either you're agreeing to try to build a good reputation for yourself or to avoid a bad one, or you're agreeing because your current peer group is telling you that this is the right course of action.

Now, what happens if the psychics aren't cold reading, but are actually talking to ghosts, and the ghosts are the ones doing the cold reading? It would look the same as if the psychic were the one doing the cold reading. From the outside, we can't decide between the two possibilities for sure.

Put it all together, and while this can be a legitimate knowledge source, we really can't analyze it very well. There are too many places for something else to be going on.

From knowledge to thinking

The final problem with supernatural knowledge is that it's really not obvious what it means to think supernaturally. We can extend peer pressure, experience, and structural knowledge into modes of thought, but what would supernatural thinking look like? Elisha was a prophet of God, and he worked a bunch of weird miracles. He purified some soup by throwing in flour. He got an axe head to float by throwing a stick into the water after it. He cured a soldier of leprosy by having him bathe in a river. Now, maybe the Bible doesn't record the part where he went off and prayed, but it looks suspiciously like he just said some stuff and then God followed through with a miracle. Moses was similar. Sometimes he prayed to God about what he should do, but sometimes he just said stuff and then God did stuff.

So it looks like supernatural thought processes are a thing, because Elisha and Moses were clearly doing something like it. But as far as intellectual analysis goes, I think the only thing we can really ask is, "What does your relationship with God look like?"

Peer Pressure

Some problems are really big, and building up any intuition for how to deal with them would take multiple lifetimes. For example, how do you encode arbitrary knowledge into a serial format that can be transmitted via acoustic waves (that is, how do we talk)? Peer pressure allows us to construct language, and it comes to surprisingly good conclusions. If you look at different knowledge domains, you see pretty much the same thing over and over: people taking complex ideas and abbreviating them with technical language. Jargon. This mirrors a principle from information theory: frequently used symbols should get short representations. Pretty much every social group in the world comes up with its own jargon.
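One standard way to see that information-theory principle at work is Huffman coding, which builds a prefix code where frequent symbols get short codewords and rare symbols get long ones. The essay doesn't name a specific scheme; Huffman is just my choice of illustration, the function name `huffman_code_lengths` is made up, and the word list is invented. This minimal Python sketch computes only the code length (in bits) for each symbol, not the codewords themselves.

```python
import heapq
from collections import Counter
from collections.abc import Hashable, Iterable
from itertools import count


def huffman_code_lengths(symbols: Iterable[Hashable]) -> dict:
    """Return the Huffman code length, in bits, for each distinct symbol."""
    freq = Counter(symbols)
    if len(freq) == 1:  # a lone symbol still needs one bit
        return {sym: 1 for sym in freq}
    tiebreak = count()  # keeps heap entries comparable without comparing dicts
    # Each entry: (total frequency, tiebreaker, {symbol: depth in the tree so far})
    heap = [(n, next(tiebreak), {sym: 0}) for sym, n in freq.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Merge the two rarest subtrees; every symbol inside them gets one bit longer.
        n1, _, left = heapq.heappop(heap)
        n2, _, right = heapq.heappop(heap)
        merged = {sym: depth + 1 for sym, depth in {**left, **right}.items()}
        heapq.heappush(heap, (n1 + n2, next(tiebreak), merged))
    return heap[0][2]


# A made-up "conversation" in which one word is used far more than the others.
words = "the the the the the the the the cat cat cat cat sat sat on mat".split()
print(huffman_code_lengths(words))
# {'the': 1, 'cat': 2, 'sat': 3, 'on': 4, 'mat': 4}
```

The most common word ends up with a one-bit code, much like jargon compresses an idea a group uses constantly into a single short term.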

Additionally, some domains don't allow failure. Consider hunting a lion or being a soldier. If you mess up you're probably not going to live. And you can't really learn from the lesson that kills you. However, your peer group can learn from that lesson. And they can pass the information on to people who weren't even present when the fatal event occurred.

Statistics and reputation

This is a statistical method of thinking. The group notices many actions. The group comes together and talks about the actions they've noticed. Communally, they decide what the right way of acting is. Then the process repeats, continuously. Whether or not the peer pressure results in beneficial outcomes is left up to chance. A peer group that has gone through enough cycles will tend to give better results. Partly this is because a peer group that consistently gives bad results may very well die off before it gets very many cycles into the process.

Reputation also plays a role. We listen to people who have good reputations, or at least to people who act like they have good reputations. Embarrassment comes into play. If you make a statement, follow through with action, and then fail, the group will embarrass you, and they are less likely to listen to you next time. You have also seen your own fallibility, so you're likely to act less sure and be more humble. Over time, repeated success makes you look good and repeated failure makes you look bad. This causes the group to weigh your opinions higher or lower, and it causes the group to warn its members about your opinions.

Propagation speed and irrelevance

The peer group isn't going to know everything. There are going to be some low-probability events that the peer group has either no frame of reference for or an incorrect frame of reference for. Different peer groups that come together and inadvertently share knowledge (old war stories or "my dad can beat up your dad" stories) will sometimes transfer knowledge about low-probability events. And sometimes the transfer will fail. But all of this takes time. The peer group can only transfer knowledge at the speed of failure plus communication, and only when the information is actually accepted.

Of course, the peer group will only work while the context that makes it effective holds. Things change sometimes. If the change is slow enough, if the new context is made up of low-probability events, and if failure is non-lethal enough, then the peer group might survive and adapt to the new context. Otherwise, it will die off or become irrelevant.

Why and why not

Peer pressure can work really well for solving difficult problems. It can also solve problems where no particular solution is necessary, only some solution. For example, there are many different languages in the world that all work very well for conveying information. Likewise, there are many different ways of greeting people so that they don't view you as a dangerous threat. It's all different, and none of it had to be that particular way. We just needed some method.

Peer pressure takes a while to converge into a pattern stable enough to be relied upon, and it doesn't react very well to significant change. The knowledge is also distributed, which helps it survive but also means that understanding the whole picture can end up being impossible.

Probably the biggest problem is that peer pressure recognizes appearance over actuality. An individual who learns how to look like someone with a good reputation may be accepted as someone with a good reputation. When an individual is willing to lie about which actions are good and which are bad, the group has to decide whether to listen to them or reject them, and the group can make a mistake. The group may also ignore good information from someone who doesn't know the right way to present themselves or their idea.

Experience

Peer pressure is a statistical method that is distributed across many people. Experience, then, is a statistical method that is centered on one person.

Much like peer pressure, your experience relies on your failures. Peer pressure relies on some sort of collective social introspection, and that can take a lot of time, as can the cycle between experimentation and introspection. Experience can benefit from an introspective phase, but you can also introspect subconsciously, and the cycle between failure and introspection can happen very quickly.

While peer groups can be influenced negatively because of the messenger's social abilities (either accepting bad messengers because of good social skills or ignoring good messengers because of bad social skills), your experience can instead look at raw evidence. Of course you can always lie to yourself or have hidden biases, but at least you have direct access to your experiences.

Still statistics

Your experience is still a statistical method, just like peer pressure. This means that there are going to be cases where your experience tells you that something is right when it is actually wrong. Again, more cycles of thinking that you know the solution to a problem, attempting to solve it, and then succeeding or failing will tend to produce better results.

However, too many cycles can also cause problems if your experience gets overfit to a specific scenario. You might become hypercompetent at that scenario, but it can be difficult to accept that your experience is not valid for other scenarios that don't share enough aspects with the one you are overfit to.

Stories

Because experience is just a statistical method that your brain runs, it can be very difficult to convey to other people how you know the correct answer, or even why something is the correct answer. The most extreme examples are when people report getting "a bad feeling in their gut" about a scenario. They admit that they don't know why they needed to get to safety, but they knew that it was necessary.

As a result, if you want to share your experience with a peer group, then you'll need to be good at telling a story about it. This could mean that you are literally a good storyteller, or it might just mean that you know how to make the story acceptable to the peer group. The story itself functions as reputational insurance for the person who accepts it.

If someone accepts your experience but then fails when attempting to apply it, they will need to find a way to protect their reputation in front of their peer group. If they don't have a good story, then they could easily lose their reputation when their peer group is not satisfied. Making bad decisions without good insurance might indicate other flaws, so it's better to be safe and ignore them in the future. However, if you have a compelling story, then the blame can be shifted to chance and happenstance.

Why and why not

Experience is going to work best when you're in a scenario where you can maintain a quick cycle between introspection and experimentation. Additionally, failure needs to be cheap and not fatal. In these scenarios, you can quickly build up experience for very complex problems that a peer group would fail to solve on its own.

For this to work effectively, you need good instincts for the problem, or at least the ability to build them up quickly. Repeated failure may eventually cost too much or damage your reputation.

Additionally, if the scenario has failures that look like success but are actually slow failures, then experience will tend to send you in a direction that only makes you successful in the short term. In the long term, things will collapse. Experience will probably not help you break out of that cycle. Breaking out requires recognizing a causal connection between events, and for that you need some sort of structural thinking.

Structural

Mathematics is kind of weird. You pick a set of axioms and a set of inference rules, and then you try to see what's possible in that space. Some statements are believed to be true or false, and others are proved to be true or false. It isn't always possible to map the results back to reality.

I like the term 'structural' more than 'mathematical' because this mode of thinking can sometimes have a tenuous relation to mathematics. Algorithms like long division, or the ones you put into a computer, can be modeled mathematically, but sometimes the formal mathematical model is overly complex and a naive approach is more than sufficient. Sometimes just being aware that causality is a thing (that is, things cause other things to happen) can be enough to get structural knowledge or do structural thinking.

Not really statistics

The best thing about structural thinking is that it escapes the statistical trap that peer pressure and experience can both fall into. You have axioms and you have inference rules (in some form or another) and all you have to do is map them to your problem and you can get correct answers. This process is also often very easy to encode into a computer (at least compared to peer pressure and experience).

There are still avenues for failure, though. Mathematical mistakes happen, and bad assumptions about whether a structure applies to a real-life scenario can occur. However, whether you have a success or a failure, the structural mode carries along with it an explanation of how you arrived at your destination.

Real explanations

A structural solution is going to leave behind a trail that can be examined later. If things are more mathematical in nature, then this trail will look like a formal proof. If they are less mathematical, then it might look like a sequence of justifications, a series of differences, or a path that was traveled.

Regardless, the trail can be checked. Either it can be checked to try to understand why it worked correctly or it can be checked to find where the flaw was that caused it to fail.
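As a small illustration of a checkable trail, here is a minimal Python sketch (the `Step` record and both function names are hypothetical) that does school-style addition while recording every column as a justification, plus a checker that walks the trail and reports the first step that doesn't follow. It only checks that each step is consistent with the one before it, not that the digits were copied correctly from the original numbers.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Step:
    """One column of school-style addition, recorded so it can be re-checked later."""
    column: int     # 0 is the ones place
    x: int          # digit from the first number
    y: int          # digit from the second number
    carry_in: int
    digit: int      # digit written in the answer
    carry_out: int


def add_with_trail(a: int, b: int) -> tuple[int, list[Step]]:
    """Add two non-negative integers and return the answer plus every step taken."""
    xs = [int(d) for d in reversed(str(a))]
    ys = [int(d) for d in reversed(str(b))]
    trail, carry = [], 0
    for i in range(max(len(xs), len(ys))):
        x = xs[i] if i < len(xs) else 0
        y = ys[i] if i < len(ys) else 0
        carry_out, digit = divmod(x + y + carry, 10)
        trail.append(Step(i, x, y, carry, digit, carry_out))
        carry = carry_out
    total = sum(s.digit * 10 ** s.column for s in trail) + carry * 10 ** len(trail)
    return total, trail


def first_bad_step(trail: list[Step]) -> Optional[int]:
    """Re-check each step; return the index of the first one that doesn't follow, else None."""
    carry = 0
    for i, s in enumerate(trail):
        follows = (
            s.column == i
            and s.carry_in == carry
            and 0 <= s.digit <= 9
            and s.x + s.y + s.carry_in == s.digit + 10 * s.carry_out
        )
        if not follows:
            return i
        carry = s.carry_out
    return None


total, trail = add_with_trail(398, 455)
print(total, first_bad_step(trail))  # 853 None

# Simulate a slip in the tens column (writing 4 instead of 5) and locate it.
bad_trail = trail.copy()
bad_trail[1] = replace(trail[1], digit=4)
print(first_bad_step(bad_trail))     # 1
```

Because the trail records each step, a failure points at a specific column instead of just a wrong final answer.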

Why and why not

Escaping statistics and providing clues as to why a specific structural thought works or fails are the most compelling aspects of structural thinking. As long as you're matching up the structure to reality correctly, you should always succeed.

The downside, though, is that sometimes the structure you have doesn't fit the scenario you find yourself in. Additionally, building new structures can take a very long time. Some problems in mathematics have taken hundreds of years to solve.

Additionally, figuring out how to get a peer group to accept a structure can be very difficult. Just because there's a trail that shows why something works doesn't mean that there is a story that is acceptable to the group. And if the structural approach is exotic or different enough from the status quo, then it may be very hard to find any allies who have experience with your approach.

Some here, some there

Everyone does all of these, at least to some extent. To learn how to talk, you have to be able to act on the peer pressure of those teaching you the language (at least for your first language). To learn how to walk, you have to build up experience from repeated failures. And to navigate failures whose causes take a significant amount of time to show up, you have to be able to make some kind of structural connection.

Having a capability doesn't imply competence in it or a preference for it. I think each individual is going to favor different thinking modes for different aspects of their life. Some might specialize in a single thought mode across the board, while others might mix and match depending on the situation.

I've found that I tend to use peer pressure for dealing with shallow social interactions and structural thinking for deeper social interactions, or for social interactions that might lead to action or commitments on my part. For problem solving, I prefer to use structural thinking. My experience-based thinking tends to lead me to incorrect answers if I don't already have a structural framework for the problem in place. This makes a lot of problem solving frustrating for me until I'm able to figure out a structure, but it also speeds up finding that structure, because I'm good at finding the edge cases and inconsistencies. On the other hand, I am able to form good intuition around problems involving physical motion or kinematics.