Early in my investigation into cognitive complexity, I noticed that you can't necessarily separate the difficulty of understanding a concept from the other concepts it interacts with in the system you're concerned with. This was a bit surprising, because before that I had done a bunch of work with type theory, lambda calculus, and functional programming. In all of these fields, being able to compose functions so that their internals stay hidden is a major and recurring idea.

I didn't immediately realize that this observation belonged in my development of problem calculus, so I set it aside to examine later. Eventually, though, I realized that separating concepts into different modules, objects, or functions (to take a programming perspective) doesn't necessarily make a concept easier to deal with. Sometimes the complexity of a concept leaks. That was exactly what my earlier observation had been saying. And thus Complexity Projection was born.

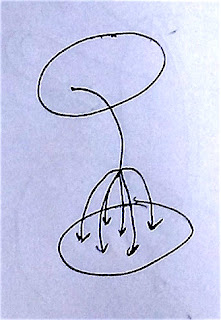

We've already encountered arrows in the previous problem calculus diagrams. However, this arrow doesn't indicate a transform from one space to another. It indicates the complexity of one space leaking into another space.

Currently, my assertion is that complexity leaks in the following manner.

The destination space will leak complexity into the output space for any given arrow. The output space will leak complexity into the input space of the related arrow. The input space will leak complexity into the source space. And finally the source space will leak complexity into any arrows that map it to other spaces.

Leaking complexity basically means that if one thing is difficult to deal with cognitively, then anything that the concept leaks complexity into will also be similarly difficult to deal with cognitively even if it is otherwise simple. The term I've been using for this leakage is complexity projection.
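To make the chain concrete, here is a minimal sketch of complexity projection as propagation along a fixed chain. The numeric difficulty scores, the node names, and the choice of `max` as the way leaked complexity combines are all my own illustrative assumptions, not part of problem calculus itself.

```python
# Hypothetical model: node names and numeric scores are illustrative only.
# Each key leaks complexity into the nodes listed as its value.
LEAKS_INTO = {
    "destination": ["output"],
    "output": ["input"],
    "input": ["source"],
    "source": ["arrow"],
    "arrow": [],
}

# Order in which leakage is applied, following the chain described above.
LEAK_ORDER = ["destination", "output", "input", "source", "arrow"]


def projected_difficulty(intrinsic):
    """Return each node's difficulty after leakage has been propagated.

    Assumption: a node becomes at least as difficult as anything that
    leaks into it, so leaked difficulty is combined with max().
    """
    effective = dict(intrinsic)
    for node in LEAK_ORDER:
        for target in LEAKS_INTO[node]:
            effective[target] = max(effective[target], effective[node])
    return effective


# A hard destination space makes everything downstream of the leak hard too.
intrinsic = {"destination": 5, "output": 1, "input": 1, "source": 1, "arrow": 1}
print(projected_difficulty(intrinsic))
```

In this toy model, a difficult destination space drags every other part of the system up to its level, while a difficult input space only affects the source space and the arrow.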

To justify the direction of the projection, consider the previous blog post about invalid elements. If you have an output space with no invalid elements, but the destination space has many invalid elements, then the output space is going to be harder to handle, because it will admit elements that do not work with the space it is a subspace of. For a contrived example, imagine the function "f(x) = x + 1". The output of this function can be any number. Now imagine that the destination space is the set of all odd numbers. Because the destination space only lets you have odd numbers, the function's output space is harder to deal with: you have to make sure you do not clash with the requirements of the destination space, even though they are not requirements of the output space itself.
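The "f(x) = x + 1" example can be sketched in a few lines. The helper names `in_destination` and `safe_inputs` are hypothetical, and I'm assuming integer inputs to keep the odd/even check meaningful.

```python
def f(x: int) -> int:
    """The contrived function from the text: any integer in, any integer out."""
    return x + 1


def in_destination(n: int) -> bool:
    """Assumed destination space: odd integers only."""
    return n % 2 != 0


def safe_inputs(candidates):
    """Keep only the inputs whose outputs land inside the destination space."""
    return [x for x in candidates if in_destination(f(x))]


print(safe_inputs(range(6)))  # [0, 2, 4]
```

Nothing about `f` itself cares about parity, yet anyone calling it now has to reason about even versus odd inputs. That extra reasoning is the destination space's requirement showing up somewhere it was never declared.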

Similarly, the output space projects complexity onto the input space because a set of valid inputs will need to walk on eggshells to avoid failure from the set of invalid outputs. The same principle again arises when projecting complexity from the input space to the source space. And finally any arrow that goes from one space to the other will have all the complexity from the entire system projected onto it. Or in other words, arrows between two spaces are hard to use because the spaces themselves are hard to comprehend.

As an example of this principle, consider compilers. A compiler is an arrow that maps an element from the space of strings (i.e. source code) to the space of binary executables. The space of strings is actually really simple. It's the set of all possible character combinations. However, the input space is already highly constrained. It has many invalid elements (e.g. invalid parsing tokens), and the space itself is highly semantically heterogeneous: many different concepts all get mashed together in the source code. The input space projects its complexity onto the source space. Similarly, the output space also has many invalid elements (e.g. ill-typed programs). This also projects complexity. Finally, the whole system projects onto the arrow (i.e. the compiler). So we shouldn't be surprised that compilers are hard for beginners to get used to, even though the input (just a bunch of words and brackets) doesn't seem to be innately complicated.
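As a rough illustration of the gap between the source space (all strings) and the input space (strings a compiler will accept), here is a sketch that uses Python's own parser, via the standard library `ast.parse`, as a stand-in for a compiler front end. The function name `in_input_space` and the sample strings are my own, and using a parser alone is a simplification: it only covers syntactic validity, not everything a real compiler checks.

```python
import ast


def in_input_space(source: str) -> bool:
    """True if the string parses as Python.

    Assumption: "parses successfully" stands in for "is a valid element
    of the compiler's input space".
    """
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


# Every sample is a perfectly good member of the source space (it's a string),
# but only some of them belong to the input space.
samples = ["x = 1 + 2", "x = = 1", "def f(:", "print('hi')"]
for s in samples:
    print(repr(s), in_input_space(s))
```

All four samples are "just a bunch of words and brackets", but telling which ones are acceptable requires knowing the grammar. That knowledge is the input space's complexity projected onto the otherwise simple space of strings.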